It’ll save your butt. Lots.

Bunny… things from Hokkaido.

For most data scientists, ad-hoc analysis requests are a fact of life. Even if your primary role is doing high level modeling/product development, there’s always lots of smaller analyses that you’l be churning out, either for yourself as groundwork for a bigger project or for someone else.

In such an environment, it is very easy to end up with a giant pile of analysis output that’s floating in a permanent “temp” directory with little context. “Quick little thing that doesn’t deserve to be checked in” you say, “It’s obvious where the data comes from”. I get it, it happens, I’ve said it myself a million times before. It never really ends well.

What’s a busy data scientist to do? Despite how much busy work it sounds like right now, you need to leave a paper trail that can clearly be traced all the way to raw data. Inevitably, someone will want you to re-run an analysis done 6 months ago so that they can update a report. Or someone will reach out and ask you if your specific definition of “active user” happened to include people who wore green hats. Unless you have a perfect memory, you won’t remember the details and have to go search for the answer.

TL;DR: Any analysis deliverable should always travel with documentation that acts to show the full path the analysis took, from raw data pull all the way to the deliverable, including queries and code, and links to previous analysis and raw data dumps if practical.

Analysis should be free-standing

In academia, when publishing a paper, we’re trained to cite our sources, lay out our reasoning, and (ideally) document our methods enough that a third party can reproduce our work and results. In writing code, we’re (again, ideally) supposed to write code with sufficient clarity supplemented with comments and documentation to allow other people (including your future self) to understand what is going on when they’re reviewing your code. The defining feature is that the final deliverable, be it code that gets checked in or a published paper, is packaged with the documentation. They live together and are consumed together.

This doesn’t normally happen for an analysis deliverable!

Analysis deliverables are often separated from the things used to generate it. Results are sent out in slide decks, a dashboard on a TV screen, or a chart pasted into an email, a single slide in a joint presentation for executives, or just random CSV dumps floating around in a file structure somewhere. The coupling between deliverable and source is non-existent unless we deliberately do something about it.

Package all the things!

But I check my SQL/Code into git already!

Sure, great for you. But when someone points to a chart you shared 6 months ago and asks “did you exclude internal users for this chart?” will have an answer for them? If not, will you be able to take that chart and trace back to the data query/ETL that generated the data for it to answer the question? It’s often best practice NOT to check large datasets into your git repo, so there’s always a disconnect between checked in code and resulting data artefacts.

This isn’t a question about there being a record “somewhere out there”, but whether the record readily accessible when it is needed, at any time and place when question is brought up, within seconds.

Cut to the chase, what are some example solutions?

Here’s a non-exhaustive list of ways to keep things together. Use what feels most natural for all stakeholders.

Excel files: Make a tab for your raw data dump, a tab for the query, a tab for the analysis. Put links/references if something is too big to fit.

CSV files: You’ll want to compress your data for sending/archival anyways, tar/zip/bz2/xz it up with your query.sql file, any processing code, etc.

Slide decks: Depending on audience and forum, citation on the slide itself, or appendix slides w/ links to analysis calculations/documentation, or docs linked in speaker’s notes

Dashboards: Tricky, links on the UI if feasible, or comments/links hidden within the code that generates the specific dashboard elements.

Email reports: Provide a link to a more detailed source, or a reference to the relevant data.

Jupyter/colab notebooks: Documentation should be woven into the code and notebook itself, there’s those text/html blocks for a reason.

Production models: Code comments and/or links pointing back to the original analysis the model stands on, or at least the analysis that generated any parameters.

Anything else I should do?

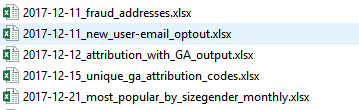

Date your files— Most analysis, especially ad-hoc ones, have context that is rooted in time — quarterly board meetings, release of a new feature, etc. Stuff from 2017 is usually less relevant in 2019. When all context is lost but someone can produce an email of an announcement around the time of deliverable was sent out, you have a date to go searching for.

My personal habit of dating analysis and deliverable files

Make queries that give the same result regardless of when they are run. Very often it’s tempting to do queries that just “pull everything” or “last 7 days”, but the one flaw they have is that the data changes depending on when you run the query, even 10 minutes later. This makes it impossible to reproduce the results of a query without modifying it, which is probably undesirable.

In some situations, it makes a lot of sense to make queries with dynamic time windows, and in others it’s not. Be conscious of your potential future use case (will people ask you to re-run it with updated data, etc) while making your decision.

It’s a chaotic world out there. Try to stay organized in your own tiny domain.