Dates, Times, Calendars— The Universal Source of Data Science Trauma

Some survival tips for all the crazy out there

A list of most of the major crazies out there, and survival tips

Just some clock in the city. (Photo: Randy Au)

There’s very few universals that all data people share. The collection of fields that we call “data science” is really broad, stretching from theoretical work in AI and Machine Learning, all the way to an individual data analyst being forced to do a pre/post analysis to “find the effect of a change” in Excel.

But there’s always been one topic that I’ve been able to bond with a fellow data person over: dates, times, and calendars. Start telling a story about some craziness you’ve found in your work and there’s instant empathy, and camaraderie. Everyone has been burned before. Badly.

So let’s talk about the good, the bad, the utterly insane, and some ways to deal with it.

Section list

ISO 8601 is Hope, ISO 8601 is Love

YYYY-MM-DDThh:mm:ss.sTZ (and many variations thereof)ISO 8601 is amazing and everyone should just use it by default when communicating dates and times. Full stop.

If every data export, data entry UI, handwritten form, past and present conformed to this one standard, we wouldn’t be in the freakish hellscape we find ourselves in today. It’s so important, this is the one ISO standard number I actually remember. There’s also a subreddit dedicated to it, Glory to ISO8601.

ISO 8601 has lots of pieces to it, but people typically recognize it for the YYYY-MM-DD date and hh:mm:ss.s time formats and the myriad variations that join the two to create date-times, express time zones, fractional seconds, etc.. There’s even more stuff in the standard that talks about things like time intervals and week numbers, but those aren’t used as much.

To be clear, the most important part about ISO 8601 is the order of the terms, it is unambiguous and easy to remember and parse. It’s this ease of understanding that makes it the preferred method of communicating dates and times. It also string sorts very nicely as a bonus.

When you output dates, do everyone a favor and output to one of the ISO 8601 standard outputs, do not invent your own format order. You’ll in turn thank the anonymous person on the other end one day when you have to parse their date outputs.

Love Your Libraries

When it comes to dates, times, and calendars, you absolutely do not want to reinvent the wheel. There’s tons of edge cases that are not immediately obvious. Don’t do it unless you have exhausted all possibilities of reusing someone else’s code.

There are plenty of libraries out there for your language of choice. I’m not just talking about the standard date/time libraries that may be built into your core language and help you do basic date calculations and output to specific formats. There are usually tools available to help you read and decipher formats without going insane.

For example, Python has dateparser, a great little library that attempts to parse most common date formats for you and convert them into datetime objects. It’s also got a bunch of other interesting features like handling of timezones, relative times (“1 minute ago”, etc) and some alternate calendars. Really cool stuff.

Maybe your language of choice has such a library. Make sure you look.

With the high points of sanity (what little there is) out of the way, let’s slowly descend into madness.

— Pain from Engineering/Fellow Data Folk —

This section is stuff we have the most control over. It’s stuff we, or our colleagues, build. It’s pain of our own making. For the most part, we can minimize the damage when we understand the issues and avoid bad practices. We can hurt others less and hopefully it comes back around.

DD/MM/YY vs MM/DD/YY vs YYYY/MM/DD etc.

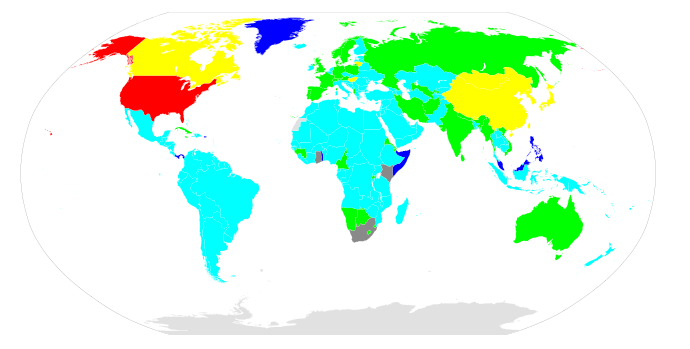

Cyan: DMY, Yellow: YMD, Magenta: MDY, Green: DMY/YMD, Blue:DMY/MDY, Red:MDY/YMD, Grey: MDY/YMD/DMY ( https://en.wikipedia.org/wiki/Date_format_by_country)

Just look at this map of date formats by country. Anyone’s who’s had to work with multiple countries, especially between the US (MDY) and Europe (DMY) has faced the dreaded “what does 8/5/14 mean?”.

You’d think this format would be irrelevant for us electronic data folk, but it’s not because you might be dealing with localized datetime stamps. Maybe you’re working w/ web scraping tools. I’ve gotten data exports from things that “helpfully” exported data according to my locale instead of something sane. I don’t know why they do that, it’s not helpful.

Data entered from various legacy systems and handwritten notes may use a legacy local format. Truly insane systems (like 2 disjoint systems slapped together after an acquisition) might even give you a mix of localized time stamps from different countries, so you’re left wondering which country a date comes from.

Especial shame callout to spreadsheets. Why the heck do spreadsheets all try to use one of these DD/MM/YYYY type formats?! Excel, Google Sheets, Libreoffice, ugh! On top of that they have the nerve to do it inconsistently on mass data imports!

Tips on dealing with this: There’s no choice here but to find out what the correct parsing scheme is for the data, sometimes by painful analysis and trial and error, other times by asking someone or reading documentation. The ideal is to convince the data source to output to something cleaner. Try to do that when you can.

12 vs 24 hour clocks

The 24hr clock format is most commonly found in logging and such, but 12hr formats are still common with human interfaces. When you have to parse/print data that’s intended for human consumption, you’ll have to deal with this issue.

Having to parse the various ways to write AM/P.M. is just ugly. Don’t forget it varies by locale too. Different countries will denote morning and afternoon differently. Consult your locale settings to see what is expected.

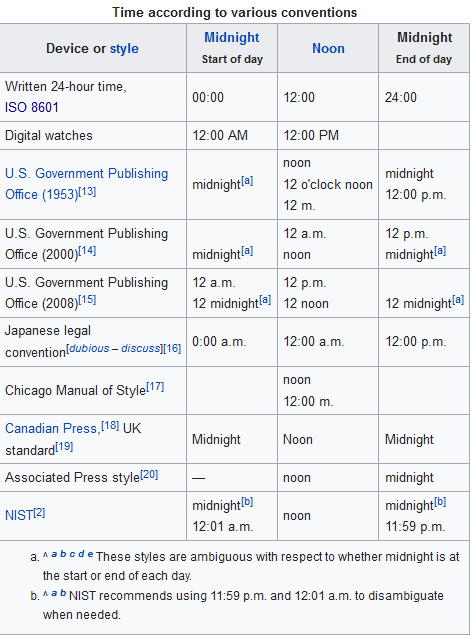

While we’re at it, did you know the naming of noon and midnight can vary depending on what convention you follow? Because of course it would.

https://en.wikipedia.org/wiki/12-hour_clock#Confusion_at_noon_and_midnight

Stick to using 24hr clocks when you generate output. Most humans can understand 24hr clocks just fine, so it’s not that big of a deal for data output. Save AM/PM for actual clock UIs.

Unix/POSIX time — It’s not too bad, but still flawed

Unix time is defined as seconds since the epoch of 1970–01–01T00:00:00Z. It has exactly 86,400 seconds per day and ignores leap seconds. It’s a smoothly increasing counter, which is a nice property to have. It means software that using unix time is unlikely to be surprised and have weird calendar related bugs. (We’ll return to leap seconds later because that’s a whole separate topic).

The problem with ignoring leap seconds for Unix time is how it accomplishes the ignoring… by reusing the same timestamp for 23:59:60 (the leap second) as 00:00:00 because it considers them equal. It means time difference calculations will be off by the number of leap seconds since the epoch unless you account for them (if you care). It will also of course make it seem like 2 seconds of activity happened within a second, it’s also irreversible data loss in that you can’t differentiate events from those 2 seconds from the time stamp alone. For human-scale usage this isn’t too crazy but those seconds can matter for analytics. For more sensitive applications, there are ways to smear the leap second across the day.

Unix time is very often stored as a 32bit integer, especially on older systems. This of course means that there’s the Year 2038 problem waiting when that integer overflows. Hopefully most systems will have switched to 64-bit integers by then, which can cover more seconds in it than the current age of the universe.

Despite the wonky handling of leap seconds, if for various reasons ISO 8601 is impractical to use for some reason, Unix time is a pretty good alternative, especially if the leap second is smeared. It’s a relatively compact 32 or 64 bit integer (or 10 character string) compared to 18+ character string for a compact ISO 8601, and generally well-behaved.

The main downside is that it’s not human readable, but most programming languages readily handle it. It’s an annoyance to constantly have to call unixtime_to_timestamp() type conversion functions, but I’ll gladly take it over many alternatives.

If you care about clocks and seconds and time, there’s apparently quite a bit of craziness involved in how POSIX chose to (not) handle leap seconds. This is an amazing read into the history of how it came to be that way.

Handling Milliseconds and Microseconds

Most time specs provide for fractional seconds fairly simply: add a decimal point and then the digits of the fractional amount: hh:mm:ss.sss for example.

The thing to know is that because floating point math on computers can have accuracy problems, the fractional numbers are usually internally represented using integers or similar structures, the decimal point is for display purposes.

One example of this is the NTP protocol actually uses a a fixed-point 64bit int to represent time. It uses the most significant 32 bits to represent seconds since the epoch, and the remaining 32 bits to represent the fractional seconds.

“The precision of this representation is about 200

picoseconds, which should be adequate for even the most exotic

requirements.” — RFC-1305, NTP v3 Specification

Since most systems don’t need 200 picoseconds of time representation, most systems don’t follow such a setup . Instead, you’ll more commonly see variants that extend glue the Unix time to an integer fractional second parameter with a dot, s.ssss. Another common format is to see time stored as a large integer called “milliseconds/microseconds since the (Unix) epoch”. This works because milliseconds and microseconds are 10⁻³ and 10 ⁻⁶ respectively, so it’s trivial to divide/multiply by the correct factor to convert back and forth with milliseconds_since_epoch/1000 = unixtime.milliseconds

Other Weird Epochs

Epochs are arbitrary constructs, so aside from the POSIX Epoch, you can come across many other epochs as you move between environments.

Most famous is probably Excel that starts in January 0, 1900 (yes Jan 0…) and counts days since the epoch start. But other interesting ones include GPS Time starting at 1980–01–06, and NTP’s epoch starting in 1900–01–01. Twitter started their twepoch w/ their first tweet.

99.99999% of the time, you can live life and do date math without thinking too hard about Epochs thanks to good libraries and interfaces (and how many things default down to unix time).

But occasionally you run into situations where it suddenly matters. I recently had to struggle with Excel’s time format because adding 1 hour to a timestamp involved adding a super intuitive 1/24 to a timestamp (because it’s keyed off DAYs).

Other cases where epochs matter is when you go beyond them (usually before them). For example, the TAI atomic scale has an epoch of 1961–01–01T20:00:00 (UT2), that’s when the atomic seconds we known as TAI essentially started accumulating. If you somehow extrapolate before the epoch, it raises questions about what the heck you’re actually using. What does it even mean if to mention a time at atomic-second scale for the year 1600? That’s effectively nonsense.

Parsing/Outputting Date/Times — dealing with strptime/strftime et al.

ISO8601 merely defines where should various parts of a date/time appear, in what manner. It’s not a language specification that defines the syntax for handling such matters. So, we have to deal with that problem as a separate issue.

I’m not aware of a standard way to represent parts of a date/time for output or parsing purposes. The closest thing to a standard comes from the POSIX and the C library based on it.

#The familiar %-based syntax we all know and constantly forget%Y-%m-%dIt’s not a hard “Standard” in the sense that the syntax can sometimes vary slightly across different sorts of systems due to some ambiguous bits in the POSIX standard, especially when mixed with various locale settings. But it’s the closest thing I could find.

The nice thing about the POSIX library is that it’s been battle tested to work. Since date/time code is so messy, few people want to reinvent the wheel. What winds up happening is that many languages that have roots in C and Unix use the format extensively because the C system API is available for use. Python, R, Ruby and Golang are like this, for example, and thus their date formatting strings are quite similar.

But not all languages use strftime! Java has its own SimpleDateFormat, Javascript has Intl.DateTimeFormat, C# has its own thing. They’re all very different from each other. Sometimes there’s facilitation by having a separate strftime option in the library, Perl for example has a native time format, but option to use time_strftime.

It’s generally a giant mess. Just look up the functions when you need them, especially if you’re going across different systems and languages. If you have to be familiar with one, the POSIX one is probably the most ubiquitous. But know that there is always something to use, and to never invent your own.

Clock Synchronization

Every clock is unique. They can beat slightly faster or slower than an exact duplicate clock sitting next to it. All clocks also are not perfectly stable. Clocks will drift out of sync due to a ton of influences including temperature, orientation, voltage, even relativity. This is true even for ultra precise and expensive atomic clocks, let alone the cheap crystal clocks on your motherboard that are many times less accurate.

Having one exact clock isn’t useful to the world, the most important thing about clocks is that clocks should agree to a high degree of accuracy. In the modern world of distributed systems, cloud computing, virtual machines, and internet of things, there are multiple clocks everywhere of varying qualities that need to agree on time.

Normally, as a data scientist, you’re not involved in the hard engineering problem of making sure every computer attached to your systems has an accurate clock. But you are likely to be one of the primary consumers of all that clock data and will be badly affected if those clocks go off the rails.

When clocks go awry, you lose the ability to say that event A happened before event B. Depending on how badly out of sync the clocks are, you can even see events and causality seemingly travel backwards in time. Databases like Cloud Spanner and CockroachDB spend a lot of effort (and in Spanner’s case, atomic clocks) to keep time accurate so that db events stay in proper temporal order (serializability and linearizability) for systems that can span the globe.

While most of us mortals don’t have to think about clock errors much, if you’re building database systems, or in a field that works at extremely fine time scales, or remote sensors that can have major clock errors, you’ll need to pay attention because clock errors can be extremely subtle and cause really weird bugs. It becomes important to think hard about just which clock is being used to set a timestamp (serverside vs clientside, etc).

Incidentally, computers have used the NTP protocol to sync clocks to each other for ages. NTP is generally considered able to get clocks within milliseconds of the time server (how many milliseconds, whether it’s 10ms or 100ms, depends on your network and setup). For even higher precision, there’s PTP which allows for additional (expensive) hardware clock assistance to boost performance (I commonly see references to clocks synced to within microseconds orb better).

— Pain from Nature —

This section is about things that are painful and complex simply because nature does not slot cleanly into human conventions. There’s not much we can do but live with what we have and make the best of it.

Leap Years

The Gregorian calendar is familiar, but the rules for leap years are more complicated than simply having a leap year every 4 years. There’s the 100/400 rule too.

Every year that is exactly divisible by four is a leap year, except for years that are exactly divisible by 100, but these centurial years are leap years if they are exactly divisible by 400. — Wikipedia

This is an annoyance to implement and easy to forget, just reuse a correctly implemented calendar module when handling dates please, even for simple things like incrementing a date into the future.

The calendar hasn’t changed in a few centuries, the existing code’s been running in stable production for years decades now.

Leap Seconds

Leap seconds exists because our atomic clocks are too accurate compared to our planet’s rotation. This makes life complicated.

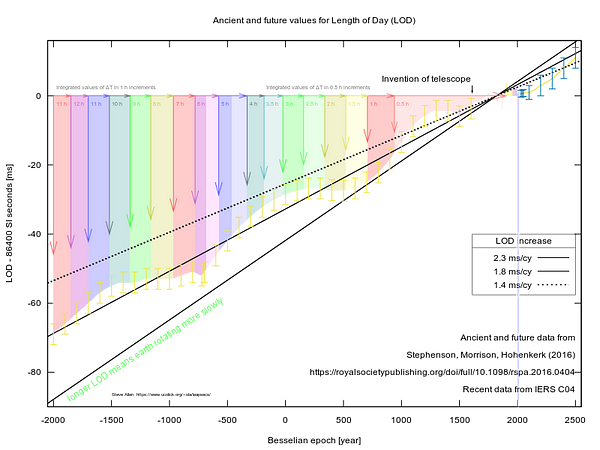

The short story to this fascinating rabbit hole of time is that the atomic timescale that uses SI seconds ticks at a slightly faster rate than the Earth’s rotation can put the sun in the same part of the sky at noon if we define the day to be exactly 86,400 seconds. Our everyday civil timescale (UTC) by virtue of using atomically defined SI seconds, beats slightly faster than the solar second (1/86,400th of a mean solar day, this is the UT1 timescale). Since civil time is based on the sun to being in the correct place in the sky at noon over Greenwich, whenever |UT1-UTC|> 0.9s, we deliberately lag UTC by adding 1 SI second and making a 86,401s day to keep the two timescales in sync.

There’s a similar protocol for if somehow the Earth rotates faster and we need 86,399s in a day, too. Apparently there have been instances where tectonics had noticably accelerated Earth’s rotation, but not enough to cross the 0.9s threshold to trigger a leap second. Imagine the insane bugs we would’ve found when 23:59:59 just did not exist for a day.

Changes of the length of day (ms difference per day) over history, colored bands are integral sums of 1 or 0.5hrs over the year

The main problem with leap seconds is that they’re not predictable. The Earth’s rotation is affected by tides, the moon, things going on in the Earth’s mantle and core, etc.. It’s a measured value. So, the only thing you can do is check the official bulletins for the leap second announcement.

In most applications, leap seconds are something we can safely ignore. Most date calculations are based around the difference in calendar day counts and then seconds are calculated based on 86,400s/day.

For example, there’s 3 days of time between the start Dec 31 and the start of Jan 3 (excluding Jan 3 itself). We’d count 3 calendar days, the 31st, 1st, and 2nd. We don’t normally say that there were 259,200 seconds (or 259,201 w/ a leap second) unless explicitly asked for that value. Losing 1 leap second would represent a 0.00038% error in that length of time.

For those instances where that’s important, there are lists of all the leap seconds, such as this one. With that you can go back and correct any affected data.

Leap Second Bugs

Leap seconds often cause unexpected bugs because the clock’s behavior can be unintuitive and it’s so rare people forget about them. One memorable instance that took down large chunks of the internet happened in 2012, where a June 30 leap second triggered bugs in the Linux kernel and Java that brought down a bunch of systems everywhere. I remember seeing the outage and the next day everyone was wary of oncoming leap seconds.

Despite that, bugs can still happen. In Jan 2017, Cloudflare’s DNS was also hit by one. This stuff is subtle, and uncommon enough that you probably don’t have tests to guard against it. So rest assured, every leap second, someone, somewhere, is having a bad night.

— Pain from Society —

This class of problems is probably the nastiest of them all. Society as a whole is full of contradictions, inconsistencies, and arbitrary rules. They also can change at any time, for any reason, technical standards be damned.

Non-Gregorian Calendars

Most of us live with the Gregorian calendar, which is nice because while we have to deal with things like leap years, we at least all agree to use the same calendar.

Until we don’t.

I’m not even talking about interesting alternative calendars like the Revised Julian Calendar that no one really uses, or the Persian/Hirji calendar used in Iran. There’s plenty of different calendars used throughout history that need to be reconciled. Historians and astronomers are most acutely aware of this since they’re the most likely of us to have to use records that stretches back centuries/millennia. The rest of us are lucky.

Instead I’m talking about artificial calendars used for retail/business purposes, like the 4–4–5 Calendar. These are in active use out in the world! I came across this while working at an e-commerce site that had to handle goods production and shipment, and so they used it to keep track of their factory work with it.

I still don’t fully grok 4–4–5, and rely on conversion functions/tables to deal with it.

Tips on dealing with this: Never, ever, try to reproduce the logic of an alternative calendar on your own without the EXACT algorithm. They are arcane! Writing your own is like inventing your own encryption algorithm. DO NOT DO IT. You will mess up. There must be a library that does this, even if it is for another language or just a lookup table. Use that existing source.

In fact, never try to implement your own calendar, even the Gregorian one.

Lunar… Anything

Lunar calendars (of which most of the ones used these days are lunisolar calendars) behaves very differently than the familiar solar calendar.

I’m of Chinese descent born in NYC. I grew up using the Gregorian calendar like everyone else. But at seemingly random points in the year that wouldn’t be clear until much later, my family would celebrate holidays on the lunar calendar. Some years the Lunar New Year is in early February, sometimes as late as March.

Why would this matter? Because some major holidays around the world are pegged to the moon. Chinese New Year is one, over a billion people go on holiday for over a week for it.

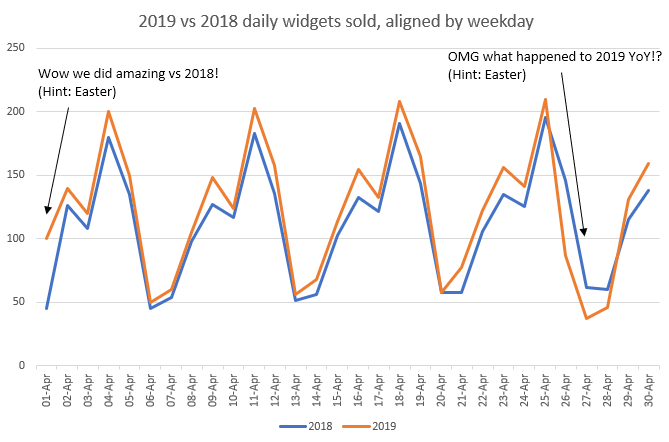

Not in Asia? Well there’s Easter, which is a major holiday in many countries with Christian influences. This stuff will cause all sorts of anomalies in your time series data.

Luckily there’s code and tables out there to figure out where these important dates are! You just need to know these things exist so that you can compensate.

Time Zones

No discussion about the insanity that is dates and times can not mention the special kind of insanity that is the concept of time zones. It takes all the complicated mess that is timekeeping (with leap seconds, leap days etc) and adds in a distinctly human (and political) element.

To put it briefly, avoid using local time like the plague. Because it will eventually fester into something that will hurt you. Store everything in UTC or something pegged to UTC like Unix time (no daylight savings time shenanigans, no governments changing their time rules). You can always display things in local time when necessary.

Why do we have to deal with them at all? Because of humans. Some analyses work better with local time, such as measuring breakfast activity. People use local time to coordinate their personal lives, but a nightmare for computers.

One of my favorite videos on YouTube, ever. The pain and frustration is real.

Time zones change fairly frequently. Not every time zone is an integer hour, India Standard Time is UTC+5:30, New Zealand’s Chatham Island Standard Time is UTC+12:45. Daylight Savings/Summer time rules can add/subtract an hour (or more), and may be observed in some regions or not. I’m not even sure I understand this situation where one location had two local times due to political reasons.

And if you ever want to keep abreast of all the changes to time zones in the world, you can download the timezone database, and subscribe to the announcements and discussions at the IANA. Things change surprisingly often.

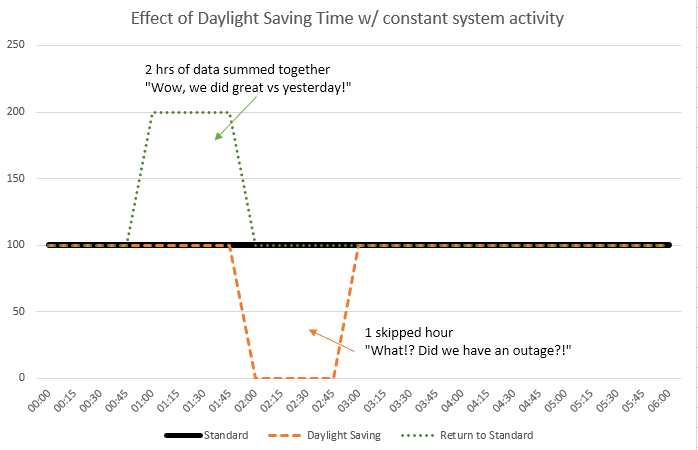

Daylight saving time, the destroyer of time series

Daylight Saving Time involves a whole country, or region, having their clocks change to another hour, typically (but not always!) done in 1 hour increments. It’s just a thing a group of people can decide to do. To make matters worse, governments can change the details of when it happens whenever they want. If you’re lucky they’ll announce it ahead of time so people can prepare for it. It’s a horrible thing to have to deal with.

What Daylight Saving shuffles look like to an analyst working in local time who forgets it happened

This is why it is considered best practice to always store data with timestamps that are in UTC, because 1) it does not have daylight saving time, and 2) it cannot at any point in the future decide to have daylight saving time.

The second point is important. Just because one place doesn’t have a daylight saving problem right now does not guarantee that it won’t have one in the future. Only UTC really carries that guarantee.

Holidays

Coming from a web analytics and ads background, one thing I’ve grown sensitive to are holidays in the markets I usually deal with (primarily the US). The primary reason is because web traffic, ads clicked, widgets sold, always drops when there’s a major holiday that affects the whole country.

Easter is especially a headache, because it’s determined by the position of the moon, meaning it’s on a different date every year, the Lunar New Year is the same way in China. I can’t count the number of times early in my career when a year-on-year or month-on-month report showed giant change numbers simply because Easter had rolled in either in the present or past. Now I know to check (and event send out warnings ahead of time if necessary) for it.

One extra frustrating thing about holidays is that they affect different things differently. Car traffic goes up if everyone travels home for holiday, but goes down if they stay put. Shopping for some things (like candy corn) goes up in specific seasons while other items aren’t seasonal. Your specific target demographic might celebrate something that the rest of the population doesn’t. It’s not only dependent on the outside world, but on what you care about.

How do you deal with this? About the only thing you can do is look at past behavior, think hard about your user base, and ask subject matter experts.

There’s probably more out there

One longish Medium post can possibly cover all the insanity of time and space. I’ve tried my best to cover the really big areas you should be mindful of, but the specifics are going to be up to you. If I’ve missed anything, let me know!

Thanks

To all the wonderful folk who gently nudged me into daring to write about this insanity.

You know who you are.

Reference material, links to cool stuff about time

For anyone who wants to dive further into the history of timekeeping and stuff, here’s a bunch of references I used as I dug into the rabbit hole of understanding how the heck unixtime dealt with leap seconds, and what leap seconds are

Wikipedia pages for UT, Unixtime, UTC, Earth’s rotation, Time formatting/storage bugs

A history of why the POSIX unix time standard came to be the way it was

A very deep list of time scales, more than just UT1 and UTC by Steve Allen

Much more stuff about time by Steve Allen w/r/t what abolishing leap seconds in UTC would mean

An explanation of timescales for PHP that finally made it click in my head

Where I found out how unix time (didn’t) handle leap seconds, another page explaining time for PHP

Chrono : Low level algorithms for date calculation — they start the calendar at March 1 to more elegantly handle leap years (leap years would tack a day onto the last day of the year instead of randomly inside it)

A list of holidays, only some tiny fraction of which will screw with you, until suddenly more do.

List of falsehoods programmers believe about time — Someone linked this to me partway through the writing of this, I think my examples cover a lot of these examples, but not everything

Did you know ntpd isn’t the only implementation of NTP out there? There’s also Chrony, and openntpd, which are designed for different needs.