Learning SQL 201: Optimizing Queries, Regardless of Platform

TL;DR: Disk is Frickin’ Slow. Network is Worse.

TL;DR: Disk is Frickin’ Slow. Network is Worse.

It’s hard to find a photo that says “optimization” okay? (Photo credit: Randy Au)

Caveat: As of this writing, I’ve used the following database-like systems in a production environment: MySQL, PostgreSQL, Hive, MapReduce on Hadoop, AWS Redshift, GCP BigQuery, in various mixes of on-prem/hybrid/cloud setups. My optimization knowledge largely stems from those. I’ll stick to strategies/thinking process here, but there are definitely features and quirks in other popular databases that I’m not familiar with, especially from SQL Server and Oracle.

This article is about speed, common strategies for making things go FASTER while avoiding specific implementation details. I’m trying to express the thought process of optimization, not the specific mechanics. Before I knew it, it’s turned into a monster of an article. There’s a lot to cover!

Intro and Background

Optimizing queries is a hard topic to write about because it involves specifics. Specifics about database engines, software, and sometimes even hardware and network architecture. I’ve been asked to write about this topic multiple times, and I’ve always resisted because I couldn’t see a way to write a generally useful article for something that very quickly gets into the weeds.

There are entire books written about how to optimize different database systems, which includes queries, but also details in the tuning of the systems themselves. They’re always written about a specific platform, not in general. It’s for good reason — every platform is different and the tuning parameters you need depend on your workload and setup (write heavy vs read heavy, SSDs vs Spinning disk, etc).

But on the way home during a nasty heatwave, I had an sudden flash of insight as to what threads tie optimization together. So I’m giving this crazy article a try. I’m going to avoid too many specifics and focus on the core thinking process that goes into identifying the things that will make your queries go faster. There will be forays into specifics only for illustrative purposes, and no real code examples. Also for brevity, I can’t be super thorough, but I’ll link to examples and further reading as I go.

Note: Throughout this article I will use the term “Database/DB” as shorthand to cover a huge variety of data stores with extremely different back-end architectures but present SQL-like interfaces. They could be your traditional SQL databases like MySQL/PostgreSQL, or a Hadoop based NoSQL store, or a NewSQL database like CockaroachDB.

Query optimization is about computers doing less work at query time

Making your queries fast boils down to making your queries do less work for the same results. There are many different strategies for achieving that goal, and it takes technical knowledge to know which strategy to employ.

Doing less work means understanding 2 things:

Know what your DB is doing

Know how to adjust what you’re commanding the DB to do, to do less work

The route to getting there is extremely dependent on the specific technical circumstances you’re working under. Optimizing a Hive query is in some ways the polar opposite of optimizing a PostgreSQL query. In fact, some querying strategies that speed one up can actually slow down the other.

What you’re actually doing when “optimizing a query” is finding ways to make your query workload play nicely with the hardware and algorithmic limitations of your system. It means the more familiar you are with hardware, software, and system peculiarities, the better you’ll be at it.

This is also why the topic is so difficult to teach, you can’t approach it as a kind of SQL style problem. The “rules” are just guidelines and there are often counter-examples. You also can’t ignore the base implementation of the system — while vendors will tell you you shouldn’t have to worry about implementation, it matters.

Here’s a list of the major points we’ll be hitting today, it starts off in the database and just expands outward. It has link jumps for your convenience.

I may have left out some cases (and you can always comment and tell me about things I’ve missed) but these are the big ones can think of.

Let’s get started.

Looking at query plans

When it comes to blog posts on the internet about optimizing SQL queries, using EXPLAIN is usually the first (sometimes the only…) thing you hear about. EXPLAIN is the SQL way to summon a query execution plan from your database. It is the database giving you a report on how it plans on executing your query — it will describe any indexes it’s using, the order of table joins, whether it will scan a whole table or not, sometimes even how many rows it expects to look at while doing the operation (cardinality).

The idea is, if you have access to this plan, you should be able to figure out (somehow) how to make your query faster. What’s frustrating is that very often people don’t tell you how the heck you’re supposed to go about that.

You’re looking for signs of work

It takes practice and patience to learn how to read a query execution plan that at first blush looks like gibberish. It’s made worse that there is no standard for what an execution plan looks like. It is HUGELY different for every database.

An example MySQL EXPLAIN (src: https://www.eversql.com/mysql-explain-example-explaining-mysql-explain-using-stackoverflow-data/)

An example PostgreSQL EXPLAIN (src: https://thoughtbot.com/blog/reading-an-explain-analyze-query-plan)

You seriously have to re-learn this skill for every database you use. You also have to learn where the quirks are (like sometimes MySQL EXPLAINs get the cardinality wrong and it gets misled because it makes weird assumptions about indexes).

Regardless of what database you’re working with, you are looking for where the database is doing a lot of work and spending a lot of time. Then you see if you can find a way to reduce it. Consult your specific DB’s documentation to see exactly what each statement means.

Signs of work

Table scans — Where the DB is reading every single entry of a table, generating lots of disk use (which is slow)

High cardinality operations — Whether there’s an index being used or not, when the DB has to work with a large amount of rows, it again means more disk and memory use and just takes time to go through all that

Loop-like operations over large numbers of records— Things like correlated subqueries where the DB has to do a separate subquery for a bunch of rows, much like a for-loop in general programming.

Writing to disk due to memory constraints —You find this both in RDBMS and Hadoop systems, when something becomes too big to fit in memory to process, it has to write down its work to disk to continue without crashing

Using the network — especially true for distributed systems where network traffic is almost required, it makes disks look fast in comparison.

“Disk” does come up a lot

Hopefully you noticed the word “disk” popping up. Because in general, disk access is very slow and is something you want to minimize. Even with SSDs being faster than spinning disk HDDs, they’re still orders of magnitude slower than RAM. This is why the first part of optimization usually involves finding ways to avoid using disk where possible, and to use it efficiently where you have no choice.

Sidebar: The speed of various components

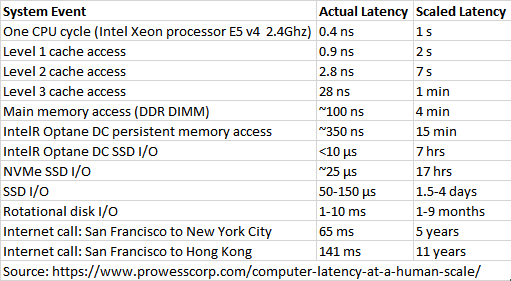

Comparison of access latency, data and scaling from https://www.prowesscorp.com/computer-latency-at-a-human-scale/

If you take a look at this comparison of CPU and RAM speeds vs SSD/HDD/Network ops, you’ll see why. RAM access is 3 orders of magnitude slower than CPU/L1/L2. Persistent Memory modules, which is essentially SSDs that sit on the DRAM bus instead of the SATA or SAS bus are still about 3x slower than standard RAM.

A more typical SSD, which we would consider a disk is 2–3 orders of magnitude slower then RAM, and spinning disk is in the milliseconds: 4 orders of magnitude slower than RAM. Networks are even worse at 5–6 orders of magnitude depending on conditions and the speed of light.

Reducing work: Indexes

Within this backdrop of “reducing work”, hopefully you can see why indexes come up so often. An index is essentially a pre-calculated map of the data, and more importantly it tells the database where on a disk to find certain entries. This means you don’t have to scan through the whole table to search for everything.

A full table scan can get ridiculously expensive because:

You’re reading a ton of data that might not be relevant to you (wasted effort)

You might be using spinning disks, which have really slow random access properties (SSDs can improve this quite a bit) and

The database files might be fragmented across the drive which makes the seeking penalty even worse (SSDs definitely help with this).

Going deeper into indexing though, you still need to know how your specific database uses indexes. For example, PostgreSQL has had the ability to use multiple indexes at the same time via bitmap scans since version 8. Meanwhile, MySQL, even in their version 8, only really has HASH and B-tree indexes in the default InnoDB storage engine and can’t compose indexes together.

Knowing such fiddly little details is actually important for optimization. In MySQL if you want to use 2 or more columns in an indexed query, you have to build a specific multi-column index using those columns, and the order matters. index(A,B) != index(B,A). If you had index(A,B), but need to access B quickly, you’d need at least a separate index(B).

Meanwhile, in PostgreSQL, it is more common and preferred practice to just have separate indexes: index(A) and index(B), because it can automatically use AND/OR logic to combine A and B on the fly thanks to bitmap scans. You can build a multicolumn index(A,B) and it will be more efficient, but you get much of the gains already by having just 2 separate indexes. Moreover, it scales better if you later need index(C), and index(D) that you want to compose.

Use the correct index types for your use case

Most database engines give you a variety of index algorithms to use when defining an index. The default is usually some variant of a B-Tree which works well for a large number of typical DB use cases. But you’ll often find things like R/radix-trees for spatial databases, or hash and full-text indexes. Sometimes there’s even more esoteric stuff available for more specific use cases.

Whatever it is, use the best one for your specific use case, using the wrong one is counter productive.

Index hinting

Sometimes you have an index, but for some reason the query plan doesn’t use it. MySQL is often made fun of for its fairly dumb query optimizer, so many advanced users sprinkle a lot of index hints, notably force index (idx) and straight_join, to force the optimizer to do the correct thing. What often happens is the optimizer decided it would be more efficient ignore and index and scan a table because it had an incorrect estimation of the cardinality of one of the tables it’s operating on.

Meanwhile, in PostgreSQL, index hinting is literally not a thing. Since an index hint is essentially a hack around the cardinality of certain tables, they’re liable to go stale as the database changes over time. Instead, the devs force you to rely on their query optimizer. Thankfully, the optimizer makes fewer mistakes than the MySQL one, so it’s not as crazy as it sounds, though you will occasionally hit edge cases that are hard to overcome.

Not every database has indexes, many things that run off Hadoop, like Hive and Spark for example, doesn’t have this concept because the underlying HDFS system is merely a distributed storage system of large files and the tools are mere data processors. You can artificially create something like an index using the file structure (most common is to use years/months/dates) but you have to essentially maintain it yourself.

Hopefully this above example can also illustrate for you how you being good at optimization means being very familiar with the particular tool you’re using.

Reducing work: Disk writes

Indexes are largely about reading things off disk. You pay a pre-calculation cost to maintain the index when data goes in, but reading it becomes much faster. So what about managing writing to disk?

— Hold up. Why are we even talking about writing to disk when we’re doing a SELECT operation you ask? Isn’t SELECTs mostly a reading operation?

Sadly, SELECTs aren’t just purely reading data because sometimes you need to write to disk to finish an operation. The easiest example is with Hadoop, where every step in the MapReduce process involves writing the data out to disk so that it can be transmitted to the next step (see an execution overview here).

Another fairly common scenario is when you are working with large data sets and the result sets don’t fit in the process’s main memory. The DB will then have to dump the contents to disk (whether it’s via OS swap or explicitly via to a file) to continue working. You’ll often notice this when a query that took 5 seconds to run w/ a small data set somehow takes 5000 seconds on a slightly larger dataset.

You’ll need to refer to your specific database documentation to figure out how to tell if your database is writing to disk, but when you find that it is happening, it’s often a candidate for optimization.

Use less data at a time

The only universal way to minimize disk writes in any system is to reduce the amount of data you’re handing at any given time. It will reduce the chances you’ll be forced to leave fast memory and fall into disk. Even in situations where you have to drop to disk, you’ll at least have less to write.

You should also be wary of operations that increase the row count, namely making joins where a single row can be joined onto multiple rows of data. Your database might have optimizations to filter down a giant CROSS JOIN if you give it conditions to work with, but if you don’t give it conditions to start with, you can create some monstrous data sets.

This also becomes even more important on a distributed data system such as Hadoop, individual worker nodes in the cluster will at some point need to transfer state and intermediate results to other worker nodes to continue working, and network traffic is even slower than spinning disk. You want to minimize this as much as you can, again by limiting how much data you are working with at any single point in time.

Do Work On the Side: Subqueries

A subquery effectively makes a temporary table of results within the database that lasts only for the query. A related feature that I’m lumping together here is the common table expression (CTE) is essentially a named subquery with nicer syntax that can be used multiple times within a query without repeating the execution.

Subqueries are interesting creatures. They can potentially make things faster, or slower, and you have to think quite hard and experiment to see whether they are appropriate to use as an optimization or not.

The thing to know about a subquery is that they are typically unindexed temporary table-like structures residing in memory. PostgreSQL won’t, but MySQL “may” try to materialize a subquery with a HASH index. Technically subqueries are unindexed in Hive and Spark too, but indexing isn’t really a thing in those systems so the point is a bit moot. It’s all just a heap of rows in memory or a table slowly spilled to disk. (It’s that word again, see where I’m going with this?)

CTE/materialized views can also stop the optimizer from exploiting index relations between the logic of the subquery and main query, acting like an optimization fence that the DB won’t cross. This behavior depends on the specifics of your database version and vendor and the details may or may not be desirable to you.

The part that makes subqueries a potential optimization is when the cost to use them to filter down a data source is less than using the data source raw. A table scan of 1K rows can be cheaper than scanning a 1M row index. You especially see this in the Hadoop ecosystem because there’s no indexing and MapReduce spills to disk all the time anyways. A well written subquery can filter down a data set and reduce subsequent operations throughout the rest of a query. This is also why you prefer to use these operations early on in a MapReduce job where you pay a disk and network cost every step of the way.

Meanwhile in a relational database, it is often much more efficient to use an existing index than to use an unindexed temporary structure. Doubly so if the subquery is so big that it has to go to disk. So you would use them more carefully, and try to keep their size small when you do.

Reducing Inefficient/Repetitive Work: Temporary Tables

Continuing from the discussion about subqueries, what if the subquery was unavoidable but is impossibly slow? There are further things you can try to optimize — use temporary tables with an index.

Remember that the main flaw of a subquery was that they (largely) aren’t indexed. If only we could put an index on them, we’d be golden. The solution for this is to make a temporary table and put the index you need on it. Then we’ll have a proper index (usually B-tree or similar) that supports many of the comparison operators we want.

Another potential advantage of building a temporary table is that it is literally 1 keyword away from being a permanent table. CREATE TABLE vs CREATE TEMPORARY TABLE. If you’re going to reuse the structure multiple times across transactions, it could be worth it.

But what’s the catch? What’s the cost of using a temp table?

One is simply permissions, you need to be able to run the CREATE command and may not have that level of access. It can be a server security/reliability issue, especially in a production environment.

Second drawback is that once you have this intermediate table in your system, you would have to maintain it so that it isn’t stale. As people start relying on the table, you might get additional demands to maintain it, or update it more often. That’s all overhead and dev time.

Finally, because you’re making a table, you are explicitly writing to disk. I’ve already hammered on how that’s a slow operation that you don’t want to do repeatedly. Your DB may have the option to create the temp table within memory instead of disk, but if it gets too big you’re back to disk. So you only want to use this option when you’re going to already write to disk anyway, so that the cost of writing to disk and making an index is largely negligible. You may also do this if you’re going to reuse the table a lot, making it cost less to do it once instead of repeatedly.

Doing less work on the network

If there’s one thing slower than spinning hard disks, it’s network connections. If you have a distributed system that needs to coordinate with a server on the other side of the continent or planet, you literally are bound by the speed of light as well as network congestion.

On top of that, the bandwidth of a network link is usually smaller than the bandwidth of a hard drive to a CPU, which makes things run even slower. Also, network TCP overhead is a thing that surprisingly matters quite a bit.

You might think that this is a problem for people with complicated distributed systems. It’s true that they factor more for a big Hadoop cluster than a single hosted server, but it does come up occasionally for even single server installations. As you scale out, your database is going to live separately from your application, or your analysis server.

I once had a simple ad-hoc query turned regular dashboard, select a handful of fields from a large table, simple SELECT foo FROM TABLE. It was easy enough to pull the data to another server to run ingest into pandas and do the grouping/pivoting there for presentation. There were indexes and everything, but it was still relatively slow to respond.

When I dug deeper, it became clear that a lot of the latency was in the part where the database was preparing and sending many megabytes of output over the wire to where the analysis was happening. The easiest way to speed things up was to shift some of the analysis logic into the SQL query itself so that less raw data had to be sent over the wire.

Minimizing Algorithmic Work: Use Less CPU

For the vast majority of this article, I’ve been talking about I/O, because I/O is typically where the biggest source of slowness is and where you can find the most low hanging fruit. But we’re not done! Computers have other things that can be slow, like the actual computing part itself!

Databases are not magical systems of computation, they’re bound by the same algorithmic constraints as any program you write yourself. They can’t sort any faster than the best known sorting algorithms (and since they’re general purpose, they probably don’t use the ideal one for your specific situation either). That means that the specific operations and functions you call can have a significant effect on performance.

Just remember, as with everything complicated and real-world, there are no panaceas. I can’t give you a hard and fast rule about what to do/not do. You’re going to have to think hard and actually test performance to find out what works for your specific setup.

Text matching functions

Handling text in any significant volume is always computationally intensive work. No matter what you do, if you have to scan through a whole string character by character, it’s an O(n) operation on the length of the string.

So operators such as LIKE with % wildcards and case insensitivity are somewhat intensive operations. They also can have interesting interactions with indexes. The other string functions, split, substr, concat, etc. all use similar algorithms and so aren’t much better in many cases. Regexes are even more expensive to use because they’re much more complex. So the more you use these functions, the slower everything gets.

On top of pure computational complexity, strings are probably the largest things you’ll find actually stored within a database. This is assuming you use best practices and don’t store large BLOBs in your DB. If you’re using a unicode encoding, every single character could potentially be 1,2,3, or even 4 bytes of data depending on specifics. Text fields can hold potentially megabytes worth of text. All this means we’ll be running out of RAM sooner.

Analytical and Window functions

Window functions are awesome, coming out of a legacy MySQL 5.4 codebase way back in the day (which didn’t have window functions) and moving to Postgres 9.4 was an eye opener. Window functions can do things like calculate percentiles or lags which used to be extremely difficult and/or very join/subquery heavy to do without. In that aspect, window functions tend to be a massive performance boost over not having them.

That said, they’re not magic. All the extra storing of array values and sorting is, again, computational work. They can be wasteful unless you’re careful about details like sorts and indexes and the size of the window. Optimizing them tends to involve experimentation and deep reading into query plans. But it’s doable, with examples like these.

Reducing Transaction Costs: Avoiding Overhead

Overhead is rarely talked about to beginners, and even intermediate users of systems. For whatever reason, it’s considered deep nerdy voodoo because it’s bound up in the “the guts of the system”, in the meta layer of how the system works. But when you start looking for it, you start realizing that overhead is everywhere.

Practically everything we do with computers involves a certain amount of overhead, essentially work that’s expended in preparation, and maintenance, of whatever it is we’re “really doing”. We’ve covered a lot of things already that can be considered overhead depending on your perspective.

As I previously mentioned, network overhead can be significant and we want to avoid it where we can by transferring less stuff over the network. It will eat into your maximum transfer bandwidth and isn’t a specific DB issue. It’s significant enough that Google had proposed and released a network protocol, QUIC that boosts network performance by avoiding some of the overhead associated with TCP (and nearby parts of the network stack) by using UDP.

There’s also the “overhead” of spinning up a disk and seeking to the correct sector to read data out, which we see as the slow seek times of a HDD and why most systems are moving to SSDs. It’s also partly why we’re trying like hell to avoid disk operations in this whole article.

Similarly, the database has lots of overhead to deal with itself. When it accepts a connection, opens up a transaction, commits a transaction, all that stuff takes time to do.

How much could this overhead possibly be, you ask? Well, one extremely common optimization that comes up is uploading large amounts of data to a database. It is almost always the case that you want to avoid making multiple small INSERTs because the DB bogs down under the load. Even if you’re doing things all within a single transaction with many INSERTs, it still has to constantly spin up a query interpreter to handle each query call.

Instead, it’s best practice to create one giant file (CSV or whatever) and batch load the data all at once, even if you send the file over the network via the query. This is multiple orders of magnitude faster. It’s utterly insane and mind blowing the first time you encounter it. But it makes total sense because for a small single-row insert, the connection/transaction handling time is more than it takes to process that insert.

The same overhead issue also can apply to running a bunch of SELECT statements. There’s still overhead involved there too. If you keep hammering a database with lots of small requests, it will eventually bog down the whole sever. You’re going to want to either pull more data and process it locally, or consider using some kind of caching.

Caches, caches everywhere

One technique that applications actually use to increase app responsiveness and reduce DB load is to simply cache the results for queries for a period of time, so that repeated calls skip the DB entirely and just give the cached result. This is app-level stuff, and isn’t specifically an optimization for your SQL query, but it’s a common enough pattern for responsiveness that you might actually see it on some analytics tools you use.

At the SQL query level however, caching is still a thing to be aware of. Multiple runs of the exact same query in quick succession can actually yield different run times even if the database is not caching the result. This is because the first query primes the disk cache and OS caches with data. It’s not likely to be your whole data set, but in narrow instances it’s a significant (and temporary) speed boost. This can affect any benchmarking you do if you’re new to the whole benchmarking thing.

In fact, while you’re not usually aware of it, databases, and the OSes they run on, will use caching techniques to squeeze extra performance out. Here’s a post about Postgresql’s caching behavior, and broadly speaking other databases will do similar things to different degrees.

Playing well with others: Contention

As an end user just firing off queries, it’s very easy to forget that databases are multi-user systems. They generally handle things so quickly and transparently, you never really know that there are usually other people hitting the server at the same time. But as you push your server to its operational limits you can run into cases where queries from other users, or queries from your own parallel jobs, will start to have an effect on you.

General load scheduling

Machine usage follows human activity patterns, and so there are certain portions of the day that are busier than others. As reports and database queries start becoming automated, the timing of large batch requests becomes increasingly important. Overnight jobs are very commonly set to run at very similar times in the night (very often around midnight-ish), because everyone individually thinks the servers will be “empty” then. Except they aren’t and now the system has to task switch between 10 batch queries all running at the same time.

This is merely a resource scheduling issue, but I’ve seen batch jobs that take only 5–10 minutes to run on a no-load server stretch to become 30min+ because they were competing for resources with a bunch of other jobs.

Locking

General load is something of an annoyance because it makes things unnecessarily slow, but a more serious issue with contention can arise via locking. Databases want to stay consistent, and that sometimes means entire tables can be locked when certain operations are run, such as a big update/write. Whether it’s a row-level lock or a table-level lock depends on specific details, but in either case, if you have another query that needs to touch those the locked rows, they simply are not allowed to run until the lock is lifted.

Locks can sometimes turn into pathological situations known as a deadlock, but that’s rare. More commonly it can make a traffic-jam of queries that seem to take forever simply because they’re waiting. Another less common effect is that locks can affect things like server replication, sometimes even taking data replication down, meaning no new data updates into/out of the server and causing all sorts of unintended side effects. (My deep apologies to the devops folk I’ve broken replication for over the years with my ultra-long analytics queries).

Finally, the Non-Answer: Throw Money at the Problem

This whole article has been dedicated to making queries go fast. So it would be wrong to ignore the ultimate answer to speed — more hardware and/or better software, aka, throwing money at the problem. It’s our last resort, not the first, but it can’t be ignored.

Sometimes, despite all your best efforts at optimizing your queries to make things go faster, you smash into limitations in I/O and sometimes CPU that are insurmountable with the systems you have. In that case, there is only one thing left to do to make things to faster: throw money at it. Re-architect your systems, get more memory, bigger RAID arrays, faster disks, a faster CPU, faster NIC and interconnects, and when all that isn’t enough, scale it out via clustering. I’m not even joking, Facebook at one point had scaled their MySQL setup to an utterly insane level. If clustering is somehow not enough, redesign your entire app system architecture for the speed you need, not just the DB architecture.

Our goal in optimizing our queries and databases is to squeeze as much performance out of our equipment as possible, to get the best performance for every precious dollar spent on a system. It’s very easy to lose sight of the big picture and laugh at the idea of “if you can’t make it faster, buy more equipment”. But we need to admit that there is inevitably a hard limit to optimization, even when it’s a really high limit, but there does exist a point of diminishing returns. A perfectly tuned and modified Miata will never reach the same speeds as an F1 car.

The final skill in query optimization is learning when you’ve reached the point where all the viable optimizations have been done, when the trade-offs made for speed are more expensive than it costs to upgrade. It’s hard because it’s a process of elimination, the proving of a negative “we can’t do more here”.

But if you ever do find yourself in such a situation, then go out and upgrade the system so that you can get back to optimizing.

Interesting links I’ve found while writing this

In no particular order:

Use the Index, Luke — DB performance for developers, lots of little details about performance and behaviors of different bits of a database

Myths about hardware and server performance — lots of tales about how throwing hardware at a problem is NOT the solution

Caching in PostgreSQL — goes into detail about caching strategies that PostgreSQL uses to deliver results faster and how caching interacts w/ other functions

A tale of query optimization — someone documenting their journey into optimizing a query, with lots of twists and turns, tracing down ultimately to an array comparison && operator