Not everyone needs real-time analytics, including you

The art is finding a good cadence for your metrics

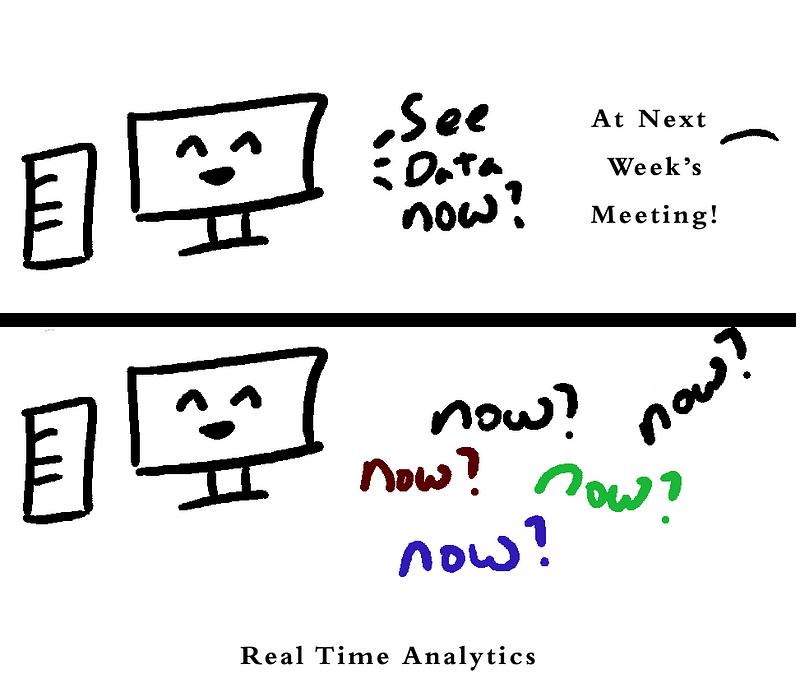

“Real-time analytics” is a sexy term that you hear every so often, slightly more often nowadays than a decade ago. Get data and make decisions on it in “real time”, says the marketing copy; who wouldn’t want that? But in my experience, for the vast majority of users, real-time analytics is NOT what they actually need. The ROI just isn’t there until an organization reaches a certain size and speed.

Most people can live with much looser constraints on query execution time and reporting latency, saving a ton of cost and infrastructure while sacrificing little besides bragging rights.

What’s the appeal?

There’s lots of marketing out there that makes it seem like if you don’t have all your data all the time you’re missing out and will be left behind by a world that’s doing AI every millisecond. As usual, marketers will do their marketing, and we should be skeptical of whatever it is they’re hawking.

Still, the basic premise is simple and compelling to begin with: “We need data/analytics/information to make decisions. There’s no telling when we’ll need this data to decide, so let’s make sure we have it ALL THE TIME.” There’s also an implication that magical AI/ML sauce can be sprinkled on top of such systems to “transform your business”.

But all things come at a price, so what’s the price we’d be paying and what are we getting? Is this for me?

As the saying goes: Good, Fast, Cheap. Pick two.

To start, analytics infrastructure isn’t free, so the usual trade-offs apply.

Good (Quality and Reliability)

Good analytics, in a broad sense, means that the analysis you can do and the results you get are good. At a fundamental level, it encompasses a number of things:

Good data collection — you’re confident that the data going into the analytics system is of good quality, no under/over counting, no weird biases, no bugs and weird missing data

Good analytics tools — not all analyses make sense for all data, but the ones that you have access to are relevant to you, whether it’s A/B testing output, or retention/cohort analysis, etc. These can range from simple to extremely complex, but either way you have confidence they’re doing the Right Thing™

Fast (Latency)

Here, I’m talking about “Fast with respect to how the analytics system operates/gives results”. You could argue that there’s also a “Fast, as in development time” component, but in this framework I consider that a cost and cover it in the next section.

The general rule of thumb is that the faster you want the analytical turnaround, the more compromises you’re going to be forced to make. Computing power is finite, and there are practical hardware limits on how much data you can store, move, and process. The only way around that is to use more complicated methods: lots of pre-processing, caching, maybe even bespoke systems to get the performance you want.

Cheap ($$$$)

Doing work to build out a system has lots of costs involved, from developer time and the opportunity cost associated with that, to the raw cost of the systems and software you need to run your analytics. Replacing existing systems is even more expensive, with refactoring, testing, and lots of stress all around.

No one should be compromising (much) on the “Good” part, because no one willingly uses a system where they’re wondering whether the numbers are accurate. So in the end it boils down to trading off speed and cost.

But I just KNOW I need real-time analytics!

And you could be correct. Is everyone complaining that they need something to make a decision and it’s not ready? Have avoidable mistakes been made because you couldn’t get your data ready in time? Then you should look into upgrading your infrastructure to something with less latency. It doesn’t have to be a full-on big data lambda architecture stack of $$$. But something.

If instead (as is more common) the bottleneck is people sitting down and consuming the data to make decisions, or the decision-making process itself is slow, then it’s a lot less likely that you need real-time analytics.

The best time to have data and analytics is when you’re making a decision.

I might even go so far as to say that decision time (or the preparation leading up to a decision) is the most important time to have data analytics available. Alerts from metrics when your systems break are the other critical time (arguably you need to make a decision then too). Just about everything else is a nice-to-have. I feel this is the key to balancing the good, fast, cheap equation.

The key is identifying the timing of your decision-making and building your metrics infrastructure to meet those needs. If something changes in your system and you NEED to respond within 5 minutes (or 5 milliseconds), your analytics has to move faster than that to leave you time to react. In that situation, low analytics latency is valuable to you, and you’ll want to invest in systems to keep things speedy.
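One way to make this concrete: the analytics latency you can tolerate is roughly your required response window minus the time people need to actually react. Below is a minimal sketch of that arithmetic. The tiers, their latencies, and their relative costs are all hypothetical numbers made up for illustration, not measurements of any real product. It simply picks the cheapest option that still fits the latency budget.

```python
from dataclasses import dataclass
from datetime import timedelta
from typing import Optional


@dataclass(frozen=True)
class Tier:
    """A hypothetical analytics setup with a typical end-to-end data latency."""
    name: str
    latency: timedelta
    relative_cost: int  # illustrative only: bigger means more expensive


# Hypothetical tiers with made-up numbers, ordered from cheapest to priciest.
TIERS = [
    Tier("quarterly report", timedelta(days=90), 1),
    Tier("nightly batch", timedelta(hours=24), 2),
    Tier("hourly batch", timedelta(hours=1), 5),
    Tier("tuned database, near real-time", timedelta(minutes=1), 20),
    Tier("streaming pipeline", timedelta(seconds=1), 100),
]


def latency_budget(response_window: timedelta, reaction_time: timedelta) -> timedelta:
    """Data has to arrive early enough to leave people time to react."""
    return response_window - reaction_time


def cheapest_tier(response_window: timedelta, reaction_time: timedelta) -> Optional[Tier]:
    """Return the cheapest tier whose latency fits the budget, or None if none does."""
    budget = latency_budget(response_window, reaction_time)
    fitting = [t for t in TIERS if t.latency <= budget]
    if not fitting:
        return None  # nothing on the list is fast enough
    return min(fitting, key=lambda t: t.relative_cost)
```

With a 5-minute response window and 2 minutes needed to react, only the two fastest tiers fit, and the sketch picks the cheaper of them. A sales decision with a 30-day window and a week of deliberation lands on the nightly batch. A 5-millisecond window fits nothing on this list at all.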

Meanwhile, if you’re managing sales accounts where every contract takes months to land and lasts for years, it’s extremely unlikely that you’ll benefit from a low-latency analytics system; the latency lives somewhere else. Similarly, if you’re ONLY using your data for quarterly reports, and there’s no actual business case for doing it any faster, you probably don’t need a fancy system either.

Notice, this is largely an organizational issue, not a technological one. No amount of speedy analytics can force an org that’s structured like molasses to move like anything but molasses.

Knowing your decision-making cadence helps with costs

Scaled real-time analytics systems tend to be very complicated beasts. Scale is implied here because, up to a point, you can do fairly low-latency analytics with a highly tuned, beefy database. (Yes, I’m conflating real-time w/ low latency a bit here.)

Once you hit the limits of what a tuned database can do however, there’s a need for processing streams of incoming data, doing some engineering trickery to bridge the fast short-term data w/ much slower permanent data stores. You’ll need more computing power to handle the growing data volume, you’ll need more network and storage, then people to maintain the systems. It’s a significant investment and you pay a cost in maintaining all that machinery.
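That “bridging” is often done along the lines of the lambda architecture. A slow batch layer periodically recomputes totals from the permanent store. A fast layer keeps running totals for events that arrived since the last batch run, and queries merge the two. The toy below is an in-memory sketch of that merge only. The class and field names are hypothetical. A real system would put a stream processor and separate databases behind each piece, which is exactly where the cost goes.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class Event:
    ts: int    # seconds since epoch
    page: str


@dataclass
class LambdaCounts:
    """Toy page-view counter combining a batch view and a speed (real-time) view."""
    batch_cutoff: int = 0                            # batch view covers events with ts < cutoff
    batch_view: Counter = field(default_factory=Counter)
    speed_view: Counter = field(default_factory=Counter)
    master_log: list = field(default_factory=list)   # stands in for the permanent store

    def ingest(self, event: Event) -> None:
        # Every event goes to the permanent log; the speed view updates immediately.
        self.master_log.append(event)
        self.speed_view[event.page] += 1

    def run_batch(self, cutoff: int) -> None:
        # The slow path: recompute everything before `cutoff` from the permanent log.
        self.batch_view = Counter(e.page for e in self.master_log if e.ts < cutoff)
        self.batch_cutoff = cutoff
        # The speed view only keeps what the batch has not covered yet.
        # (A toy shortcut: real speed layers discard old state instead of rescanning.)
        self.speed_view = Counter(e.page for e in self.master_log if e.ts >= cutoff)

    def query(self, page: str) -> int:
        # Serving layer: merge the slow-but-complete view with the fast-but-recent one.
        return self.batch_view[page] + self.speed_view[page]
```

Even in toy form there are two views and a merge step to keep consistent. At scale, each of those becomes its own piece of infrastructure to run and maintain.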

Meanwhile, batch jobs can be much simpler, at the extreme a single weak analytics database, a SQL query, and a cron script that sends an email can be all that’s needed to deliver a report on time when you have 8 hours to run the job.
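For contrast, here’s roughly what the batch end of the spectrum can look like. This is a minimal sketch. It assumes SQLite as the “weak analytics database” and a hypothetical `events` table with `ts` (Unix seconds) and `user_id` columns. The script builds the report as an email message. The actual sending (via the standard `smtplib` module to your own mail server) and the cron schedule are left as comments because they depend on your environment.

```python
import sqlite3
from email.message import EmailMessage

# Hypothetical daily rollup; swap in whatever question your stakeholders actually ask.
REPORT_SQL = """
    SELECT date(ts, 'unixepoch') AS day,
           COUNT(*) AS events,
           COUNT(DISTINCT user_id) AS users
    FROM events
    GROUP BY day
    ORDER BY day
"""


def build_report(conn: sqlite3.Connection) -> str:
    """Run the one SQL query and format the rows as a plain-text table."""
    rows = conn.execute(REPORT_SQL).fetchall()
    lines = ["day         events  users"]
    lines += [f"{day}  {events:>6}  {users:>5}" for day, events, users in rows]
    return "\n".join(lines)


def build_email(body: str, to_addr: str) -> EmailMessage:
    """Wrap the report in an email; the From address is a placeholder."""
    msg = EmailMessage()
    msg["Subject"] = "Daily metrics"
    msg["From"] = "reports@example.com"
    msg["To"] = to_addr
    msg.set_content(body)
    return msg


# To send it, something like:
#   import smtplib
#   with smtplib.SMTP("localhost") as smtp:
#       smtp.send_message(msg)
# and schedule the script with a crontab line such as:
#   0 6 * * * /usr/bin/python3 /path/to/daily_report.py
```

If the query takes hours, nobody notices, as long as it finishes before people read their email.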

But what about interactive exploration? We need the low latency for that.

Look, I’ve been an analyst, I get that it sucks to have to wait 10k+ seconds (~2hr 45min!) for a large query of death to come back. But how much money is your organization willing to spend to get that 10k seconds down to 5k seconds? 100s? 1s?

Thanks to diminishing returns, costs tend to rocket upwards as your time requirements get shorter. There’s usually some low-hanging fruit, like upgrading to SSDs or newer hardware. But no architecture scales forever, so eventually you’ll have to rip everything apart and put together something new, and that’s costly. So if there’s a business case for it, yeah, fight for the lower latency you need to do your job, up to the point the boss is willing to pay for.

What if we don’t know we need a real-time analytics system?

YES, this is definitely a thing that could happen. Having more timely analytics can lead to a fundamental change in how the organization operates and all sorts of wonderful unicorns. But access to tons of noisy up-to-date data can also be a distraction. It’s hard to predict from a pure tech point of view what you should do here.

Instead, I’d take a step back. This is a question about the organizational structure itself. Is it willing, or even capable, of changing if given access to data faster? Are there people in place who understand how to use the data to make decisions, and who can change processes to match? Maybe there’s a use case from a competitor that they want to imitate?

Throwing tech at an organization that isn’t ready to make use of it rarely works well. So if you’re budgeting to make your analytics infrastructure faster, you might have to budget for training on top of it.