Attention: As of January 2024, we have moved to counting-stuff.com. Subscribe there, not here on Substack, if you want to receive weekly posts.

Yes, I know there are a gazillion posts about the Zillow situation out already. Aside from a brief summary for context and references at the end, this week’s post is NOT about what happened AT Zillow. Instead, it’s about what lots of professional numbers people (data scientists, risk managers, finance people, quants, etc.) have been saying about and around the situation, which I found super fascinating.

The first week of November 2021 was a very exciting time for Data Science. Zillow, a company primarily known for their real estate listings website, declared in their Nov 2nd third-quarter filings (available here) that they were winding down their iBuying* business, Zillow Offers, due to the massive losses accrued. These included $304MM in Q3 write-downs on properties they were holding and (if I’m reading the financials right) an average loss of about $140k per home sold in Q3 (source: Q3 shareholder’s letter, pp. 8 and 14). The consequence wasn’t just the end of that line of business, but also a nearly 25% staffing reduction.

*iBuying is a term for the strategy where a company uses algorithms to set the price of an instant (hence the “i”) offer on a house when a seller applies. If the offer is accepted, the deal is done in cash for the house as-is, with none of the inspections or contingencies normally associated with a mortgage-backed transaction. Obviously, predicting the “true value” of the property is extremely important here.

This, obviously, shocked quite a lot of people and sparked a ton of news articles and discussions on Twitter and the internet in general. As I read the many threads and discussions going by, I started noticing interesting patterns in the conversation, which is the true topic of today’s post.

So who are the speakers of interest I’m discussing today?

The group I care about today is “data folk”: data scientists, of course, but also finance people like quants and high frequency traders, people who work in risk management, etc. It’s the cluster of nerds who, for various reasons, have been drawn to the spectacular failure of a data+modeling+execution problem and are eager to share opinions (valid or not) from their perspectives.

Obviously, lots of other people unrelated to that data cluster also chimed in as the news spread, but I’m ignoring those. There’s plenty of valid discussion out there about whether iBuyers are inflating real estate prices, as well as about giant institutional landlords, for anyone interested in searching.

Here are the themes that jumped out at me as I watched the threads fly by over the week. There are links to examples and discussion of each further down.

- People ragging on Zillow’s DS team in various ways
- Data scientists saying this is a failure in model building and bringing models to production
- Data scientists poking good-natured fun at Sean Taylor, creator of the Prophet library, which had been featured on a Zillow data science job posting
- Quants mocking the (assumed) simplicity of Zillow’s model
- Quants chuckling that this is a beginner’s mistake they’ve seen countless times
- People who manage risk going “yeah, it happened before and happens all the time”
- People, myself included, questioning the processes and structures that let such massive losses mount in the first place

People ragging on the DS team

Given that, at a very high level, Zillow Offers failed because the house price prediction model couldn’t predict house prices accurately enough, to the tune of requiring a $300MM write-off of inventory value in a single quarter, some people were quick to throw the DS team under the bus in various ways.

Below is probably the most prominent of such threads:

I had also spotted (possibly on Reddit? I forget exactly) references to how Zillow Offers had, a few years ago, hired the winner of a Kaggle-like Zillow Prize competition for developing a model that priced houses (the winner was announced May 2017). Zillow Offers had started around 2018, so that was probably during the very early period of the business unit.

All of these were essentially calling into question, directly or indirectly, the general competence of the team. If they only knew better, mathed better, whatever, then perhaps they wouldn’t have had such a public failure. It’s a very easy place to point fingers, since the people responsible for modeling would be the DS team.

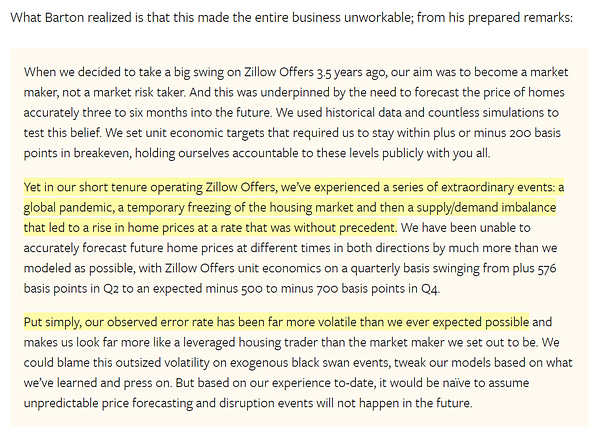

I should note that, having listened to the earnings call and Q&A myself, I’m not completely sure the CEO of Zillow threw the DS team under the bus there. There was clear mention that the modeling wasn’t performing as expected, but the decision to wind down the entire business unit was framed closer to the sentiment of “even if we fix the modeling and operational issues, the volatility of the up/downswings is too much risk for our tastes”.

Data Scientists saying variants of “See? Do better!”

Continuing the “model better, math harder!” theme from above, but with less judgmental negativity, a group of people took the situation as a warning to data scientists: a lesson in how we need to be more rigorous, more careful, and overall better in our modeling work. Various financial/risk management people voiced a similar view, in more negative tones, since to them those safeguards are fundamental.

Above is a longish example of such discussion from a data science viewpoint. There’s a lot of talk about testing, validation, and other practical details of modeling, and about handling the risks (and the commensurate care required) involved in deploying a model that would be directly tied to the lifeblood of a company.

I’m completely in favor of everyone bringing significantly more care and rigor to DS modeling practice. But I also find myself disagreeing with the core premise of this branch of discussion (not the linked post specifically, which doesn’t come to this conclusion, but the overall sentiment): that if you build a better model, in a better way, things won’t explode as badly. Effectively, it’s a technocentric view.

The counterexample is the entire finance industry. It lures some of the brightest minds on the planet to model things in exactly this problem space, and even they still see models explode spectacularly on a regular basis. They just do it outside of public view. If anything, the defining difference between them and us is that they’ve been at this for decades and have developed processes and controls, completely separate from any modeling activity, that mitigate the risk of explosions far better than ours do.

Data Scientists poking fun at other data scientists

With the thread about Prophet going viral, a lot of DS Twitter couldn’t help but poke fun at Sean Taylor, who had created the Prophet library. One example below:

Many joined in; it was just us having fun, and everyone involved (including Sean) took it as such.

Quant folk mocking the modeling in various ways

Since Quantitative Finance folk work in exactly this problem space, many had opinions on the situation; one example is below:

Of course, none of us know any details about the modeling process used, so it’s all open speculation. Even so, there was a general “amateur hour” sentiment in the air. We have no idea whether Zillow had hired anyone with quant/trading experience for the data science team, but given the failure, no one was going to waste space in a tweet on that sort of nuance anyway.

Quants saying this is a beginner’s mistake

Much less derisively than above, other quants noted that stuff like this happens in their field all the time. It’s perhaps best summed up in the thread below:

A lot of the assumptions that “work” in data science take a very simple view of the world, and those assumptions very often break in practice. To make things worse, due to the adversarial nature of these transactions, the broken assumptions tend to carry a negative sign (because the counterparty is actively trying to get a better deal for themselves), whereas a lot of naive data science models rely heavily on the assumption that our errors cancel out.

From here we get lots of people referencing the lemons problem, or mentioning the broader class of problems under the term “adverse selection”. It’s a very well known and studied problem stemming from information asymmetry, within the fields of economics and risk management. It’s also something that a random data scientist might never have heard of. I, myself, understood the concept but didn’t know the term “adverse selection” until this week, because my background is in philosophy, operations mgmt, and social science. I was conceptualizing it as a form of self-selection bias.
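To make the “errors cancel out” point concrete, here’s a toy adverse selection simulation in Python. Everything in it is a hypothetical assumption, not anything known about Zillow’s actual model: home values drawn uniformly between $200k and $600k, an offer equal to the true value plus unbiased Gaussian noise with a $20k standard deviation, and sellers who know their home’s true value exactly and only accept offers at or above it. The function name `simulate_ibuying` and all its parameters are made up for illustration.

```python
import random
import statistics


def simulate_ibuying(n_homes=100_000, noise_sd=20_000, seed=0):
    """Toy adverse-selection sketch with hypothetical numbers (not Zillow's).

    The pricing model is unbiased: offer = true value + zero-mean noise.
    ASSUMPTION: each seller knows the true value and accepts only offers
    at or above it, so the buyer ends up holding only its overpayments.
    """
    rng = random.Random(seed)  # fixed seed so runs are reproducible
    all_errors = []       # pricing error on every offer made
    accepted_errors = []  # pricing error on offers the seller accepted
    for _ in range(n_homes):
        true_value = rng.uniform(200_000, 600_000)
        offer = true_value + rng.gauss(0, noise_sd)
        error = offer - true_value  # positive means the buyer overpays
        all_errors.append(error)
        if offer >= true_value:  # the seller only says yes to deals good for them
            accepted_errors.append(error)
    return (
        statistics.mean(all_errors),
        statistics.mean(accepted_errors),
        len(accepted_errors),
    )


all_mean, accepted_mean, n_accepted = simulate_ibuying()
print(f"mean error over all offers:      {all_mean:>10,.0f}")
print(f"mean error over accepted offers: {accepted_mean:>10,.0f}")
print(f"offers accepted:                 {n_accepted:>10,}")
```

Averaged over every offer, the model looks great because the errors cancel to roughly zero. Averaged over only the deals that actually close, every single error is an overpayment, around $16k each with these made-up numbers (for zero-mean normal noise, the mean of the positive half is the standard deviation times √(2/π)). Real sellers don’t know their home’s value exactly, so the real-world effect would likely be weaker than this toy version, but it points in the same direction.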

So this discussion across the entire band of data professionals has been a great opportunity to get a taste of how others view the same issue through similar, or sometimes completely different, lenses. I suspect this is why many other data folk have been interested in watching the conversation.

People saying “yeah, this happened before, will happen again”

The history of finance is full of models exploding to disastrous effect. During my childhood, I remember the famous Long Term Capital Management collapse and the subsequent stories and TV documentaries about the event.

The main issue is that people always find new ways to fail to learn from history, and things repeat with minor variations. But perhaps the more interesting bit is that data professionals from all these fields are mixing together in one conversation on Twitter, giving us more opportunity to learn about these once domain-specific stories.

Also, as financial explosions go, this was fairly tame. A few hundred million dollars in losses at a company that has other, bigger revenue streams to sustain it is merely painful and embarrassing. If Zillow weren’t such a visible brand, few would have taken note.

Questioning the organization and process

Finally, there’s a group of people who questioned why this data science team was allowed to rack up losses that could result in such catastrophic consequences. They had over a billion dollars’ worth of property, were making a few thousand transactions a quarter, and were racking up huge losses both in write-downs on their inventory and on individual sales. All of these would have been clear signs that the model was failing.

Citing a NY Times article (paywall), the above thread notes that the CEO had been pushing to expand the business aggressively starting in Q1, when the coming vaccines had made the real estate market extremely hot and profitable.

If Q1 was their ramp-up signal, then their model might’ve been measured against the abnormal growth of that period, leading to pressure to close more deals and ultimately pushing the model past its breaking point. Then the market cooled down in Q3, leaving the estimates for houses recently bought but not yet resold overvalued. It won’t be the first or last time organizational pressure destroys whatever guardrails a data science team can think of.

Others voiced similar concerns too.

Discussion

As you can see, there are plenty of ways to look at the situation. Since the facts are unavailable to the public, and the only ones who know are either in the executive suite or filling out unemployment forms and polishing their resumes, the whole situation provides a blank canvas for commentary. We have both constructive commentary (for the data community, at least) and negative commentary (all the snarky, superior posts). Much like how we can analyze a piece of literature and use the text as a mirror for different aspects of our world, people are using this Zillow situation as a way to reflect on data science and data practice in general.

In the end, I hope that the greater data community learns a bit more about how our different communities value different things, which comes to the forefront when every group talks about the same subject. Data scientists can learn a bit about how people who deal with similar risk models view the world, while those same people can also appreciate how many data scientists work in landscapes where the market isn’t adversarial, and thus models can take on a different form without negative consequences.

References I used

The main Twitter thread where I asked people to give their favorite discussion examples, many of which made it into this post.

Zillow’s Q3 shareholder call, with timestamps for the Q&A sections that mentioned Zillow Offers:

25:55 - Q: What went into the decision? A: Could not predict future prices of homes accurately enough. Too much volatility. Not confident enough in the market to put capital at risk.

30:55 - Q: How much was it the forecasting being optimistic, versus the market volatility swing? A: Given Q1 performance, they moved to grow. H2 rolled around, but the rate of price increase declined significantly and forecast accuracy was lower.

40:10 - Q: What event changed the view from a very positive H1 to a shutdown in Q3? A: A big markdown on a small number of units forced them to recognize the inherent risk of the business. Believes that even if they eventually solved the modeling/operational issues, it wouldn’t scale to the many thousands of transactions a month they’d want.

A pretty cogent opinion piece from Bloomberg (soft paywall), essentially arguing that Zillow wanted to be a market-maker earning 2% per transaction purely on the price spread, but instead ended up in the leveraged house-buying business.

A Reddit thread from someone claiming to be ex-Zillow (from before the current events), talking about how the Zestimate and Zillow Offers models were kept separate for potential legal reasons. There were definitely random people on Twitter speculating that Zillow may have been manipulating the market by tweaking the displayed Zestimate, but this is something we’ll never find out without an investigation or legal discovery of some sort.

About this newsletter

I’m Randy Au, currently a Quantitative UX researcher, former data analyst, and general-purpose data and tech nerd. The Counting Stuff newsletter is a weekly data/tech blog about the less-than-sexy aspects of data science, UX research and tech, with occasional excursions into other fun topics.

All photos/drawings used are taken/created by Randy unless otherwise noted.

A curated archive of evergreen posts can be found at randyau.com

Supporting this newsletter:

This newsletter is free, share it with your friends without guilt! But if you like the content and want to send some love, here are some options:

Tweet me - Comments and questions are always welcome, they often inspire new posts

A small one-time donation at Ko-fi - Thanks to everyone who’s supported me!!! <3