News of the private acquisition of Twitter, the big home of data nerd talk, just landed this Monday. There are concerns and uncertainties about the platform in the future. As someone who’s lived 20 years of social platform implosions, I don’t expect massive change quickly, but I’ll encourage everyone to find each other on multiple platforms to connect, be it Discords, Slacks, newsletters, email, or IRC. If/when people decide to scatter to the winds, you’ll be more likely to hear about any new gathering spaces that way. Make space for each other. If you’d like to share your favorite non-Twitter hangout for data talk, send links to me and I’ll pass them along.

If you ask me what the job of a data analyst involves, I’d say that it involves pulling a narrative out of a collection of data, one that is supported by the available data. Doing this involves the use of lots of methods and tools, some crude, some sophisticated. But there’s one thing that gets lost in the unending discussion about tools and technology that is endemic in data science: data analysis is flat out impossible without context.

As I bounced the thought around my brain over the week, it started feeling increasingly important to explore. I’m not sure if this will fundamentally rearrange my view of the practice of working with data, but it does seem to make things that were once puzzling somewhat less so.

Everything depends on context

One obvious aspect of working with data is that math behaves exactly the same no matter what kind of data or context you apply it to. [An oversimplification; people in the back, especially the pure mathematicians with stacks of axioms, can simmer down.] This property of “how numbers work is universal” gives us data practitioners a highly portable toolbox: I can swap jobs as many times as I want and, while my programming language or domain knowledge might have to change, I won’t have to relearn my math. In terms of being confident in my ability to find future work, it’s a great thing.

But when it comes to the most critical part of analysis, pulling a conclusion or narrative out of data, those math tools are insufficient. Context is what’s necessary for the task. Take this simple chart:

A line that seems to be growing exponentially. Great. But what story can that chart tell you? Nothing. We’d be overjoyed if the line represented how much money we had. We’d be horrified if the line represented how much money we owed to a loan shark. Context makes all the difference.

But maybe you’re shrugging right now. This is elementary stuff we teach everyone who works with data — label your columns because it makes all the difference in interpretation. I’m here to make the argument that managing context, how we destroy, preserve, or transmit it, is utterly central to working with data. We might not think about our actions in that framing, but a lot of our work and our struggles stem from that fact.

A couple of years ago, I argued that data cleaning is analysis. The crux of that argument was that every step of cleaning data involves making choices that shape the complete analysis going forward. That’s why it’s impossible to separate “cleaning” from “doing analysis”, despite how many people moan and groan about how tedious cleaning data is, and the near infinite amount of VC money thrown at the data space lately.

This time around, I want to take things one step further and argue that data management is the art of manipulating, destroying, preserving, and transmitting context.

Collecting data is inherently context destroying

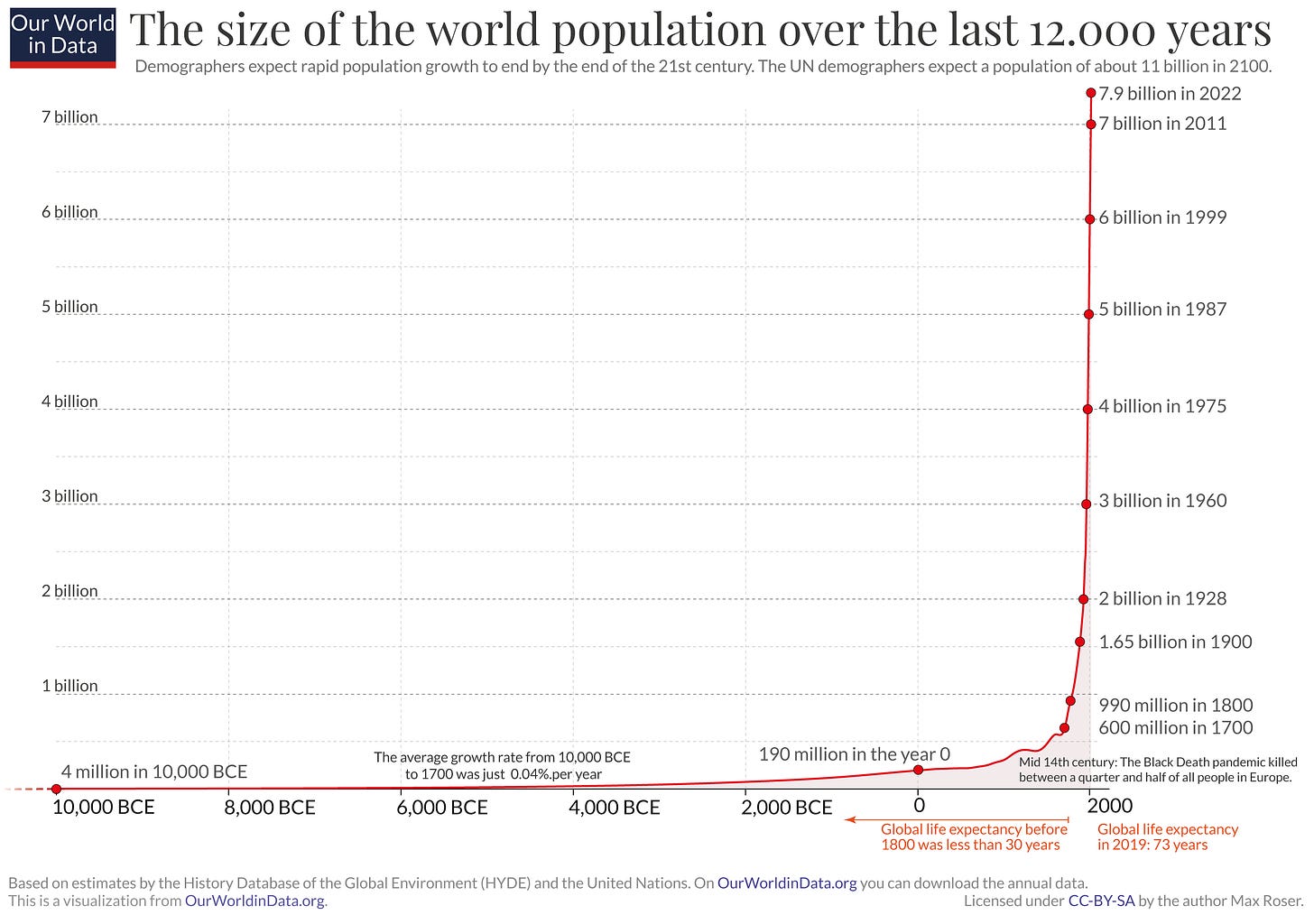

Take a look at the graphic below, a chart of the estimated population of the world since 10,000 BCE. It’s a collection of data that effectively summarizes the past twelve thousand years of human history with a single number series.

That single line covers all of human history, every war, disease, birth and death. It throws all of that information, that context, away to tell one story. They even put a tiny comment in the corner to mention that the Black Death was what caused the tiny dip in an otherwise monotonic curve.

The act of building and cleaning a dataset to become “a count of the human population on Earth” has discarded all other concerns. With this graphic alone, you’d never even know that wars or agriculture existed. An analyst working purely and completely from this data would have very little to say about it. There’s not much to pull out of it besides a population growth rate and some observations about the dips and inflections. At best they can speculate that “something happened in recent history” that allowed for nearly exponential growth.

In order to make data more useful and put together a stronger narrative, an analyst has to bring in context from other sources. Overlay major developments like learning to control fire, the development of agriculture, advances in medicine, and the industrial revolution. Depending on what narrative and analysis the analyst wants to build, they have to layer on different things from different sources.

We can take this even further.

Pull out two coins from your wallet or piggy bank. If you decide to count the two coins together as “two coins”, you’ve already discarded any differences in weight, composition, history of manufacture, and countless other attributes as irrelevant to collect. The act of counting and grouping is, by necessity, context destroying.

Someone who’s collecting coins might distinguish the same coins as being different, one minted in 1999, the other 2010. Another person might choose to differentiate based on some other property like the designs stamped on the coin, or the particular mint that struck the coin. Right from the outset, before we even have data, we’ve already made important decisions as to what “counts” as being counted. That’s the first bit of context associated with our data.
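To make the coin example concrete, here is a minimal Python sketch. The `Coin` fields and the two sample coins are made up for illustration; a real coin has countless more attributes than these three.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Coin:
    # Hypothetical attributes chosen for the example only.
    denomination: str
    year: int
    mint: str


coins = [
    Coin("quarter", 1999, "Denver"),
    Coin("quarter", 2010, "Philadelphia"),
]

# Counting everything as "a coin" discards every attribute.
total = len(coins)

# A collector keeps the year, so the same two objects are now distinct.
by_year = Counter(c.year for c in coins)

# Keying on denomination instead collapses them back together.
by_denomination = Counter(c.denomination for c in coins)

print(total)
print(dict(by_year))
print(dict(by_denomination))
```

The same two objects give three different "counts" depending on which attributes we decided were worth keeping before any analysis started.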

Data collection is just the start of the context manipulation situation

It's important to know what data is being collected and how it is collected because that gives vital information about what you can and can't say with a given data set. Identifying biases within a data set often starts here.

But once data is collected, there are still many more places where we must manipulate the context. Every step of aggregation or deleting fields obviously removes context from the data set. All those decisions become embedded within our resulting data set, and we need to communicate those decisions downstream.
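As a rough illustration of aggregation removing context, here is a small Python sketch. The order rows are invented, and the `decisions` list is just one hypothetical way of carrying the choices downstream alongside the result.

```python
from collections import defaultdict

# Hypothetical order rows: (day, region, amount). Values are made up.
orders = [
    ("2022-04-25", "east", 10.0),
    ("2022-04-25", "west", 30.0),
    ("2022-04-26", "east", 20.0),
]

# Aggregate to one number per day; the region field is dropped here.
daily_total = defaultdict(float)
for day, _region, amount in orders:
    daily_total[day] += amount

# Record what was destroyed so downstream users know the limits.
decisions = [
    "summed amount per day",
    "dropped region; per-region questions need the raw orders",
]

print(dict(daily_total))
```

After this step the data can answer "how much per day?" but can no longer answer "how much per region?", and only the note in `decisions` tells the next person why.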

But not all data work is about destruction. We can also add context via joining in other data sets or just overlaying context in parallel. Think about all those fact tables we have, or how that population data overlaid the Black Death to explain a dip. We're trained to leave things like join keys and unique identifiers in our data specifically to enable this sort of operation, but it’s not even a hard requirement that different bits of data “join” cleanly.
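Here is a sketch of adding context without a clean join key. The yearly metric and the event are placeholders, not real data, and `annotate` is a hypothetical helper; the point is only that the two sources line up by a year range instead of a shared identifier.

```python
# Made-up yearly metric; the numbers are placeholders, not real data.
series = [(2018, 100), (2019, 120), (2020, 90), (2021, 130)]

# Context from a separate source, keyed by a year range rather than an id.
events = [
    {"label": "pricing change", "start": 2020, "end": 2020},
]


def annotate(series, events):
    """Attach the labels of every event whose range covers a point's year."""
    out = []
    for year, value in series:
        labels = [e["label"] for e in events if e["start"] <= year <= e["end"]]
        out.append({"year": year, "value": value, "context": labels})
    return out


annotated = annotate(series, events)
for row in annotated:
    print(row)
```

The dip in 2020 now carries a candidate explanation, which is the same move the population chart makes with its Black Death note.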

Context management gets extremely difficult

Thus far I've been mentioning two extremely basic operations, removing context and adding context. Both are so fundamental to working with data that we have a whole host of names and techniques for them. It’s “baby stuff”. Why do I find such basic stuff interesting?

Because this framework helps explain why building a data warehouse can be so hard. In my experience, building a DW is hard primarily because of two things — getting disparate systems with incompatible context schema to agree takes a ton of work, and also predicting the future by picking what to aggregate ahead of time for a data cube (aka choosing what context is okay to destroy) is extremely hard. Most other issues stem from these two roots.

When I say that “incompatible context schema” are difficult, I'm not only thinking about the simple raw field names and identifiers. Different systems can record things with conflicting concepts. In the two coins example, finance might treat all coins the same because they care about nominal currency value, while an inventory system might consider the two coins different collector's items with vastly different values. Finding some functioning middle ground that links the two together is a challenge.
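A small Python sketch of that conflict, with two hypothetical systems describing the same two coins. The field names and the appraised values are invented for illustration.

```python
# Two hypothetical systems describing the same two physical coins.
finance_ledger = [
    {"coin_id": "c1", "face_value_cents": 25},
    {"coin_id": "c2", "face_value_cents": 25},
]
inventory = [
    {"coin_id": "c1", "year": 1999, "appraised_cents": 400},  # made-up appraisal
    {"coin_id": "c2", "year": 2010, "appraised_cents": 25},
]

# Each system is internally consistent, yet they disagree on "value".
finance_total = sum(r["face_value_cents"] for r in finance_ledger)
inventory_total = sum(r["appraised_cents"] for r in inventory)

# One possible middle ground: keep both notions of value side by side
# instead of forcing a single "value" column.
appraisal = {r["coin_id"]: r["appraised_cents"] for r in inventory}
reconciled = [
    {
        "coin_id": r["coin_id"],
        "face_value_cents": r["face_value_cents"],
        "appraised_cents": appraisal.get(r["coin_id"]),
    }
    for r in finance_ledger
]

print(finance_total, inventory_total)
```

Even with a shared `coin_id`, the hard part is not the join itself but agreeing on which meaning of "value" a downstream report is allowed to use.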

Meanwhile, the framework also sheds light on why building data cubes is hard. It’s not simply that it’s difficult to predict what people will want to do with a given precomputed aggregation; there’s also a bigger worry about cutting off other important analyses based on what information gets destroyed. The fear of breaking things can cause decision paralysis that sends people off into many iterations of trial and error figuring things out.
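The trade-off can be sketched in a few lines of Python. The fact rows and the `build_cube` helper are hypothetical; a real cube would live in a warehouse, but the shape of the problem is the same.

```python
from collections import defaultdict

# Hypothetical fact rows: (day, region, product, amount). Values are made up.
facts = [
    ("mon", "east", "widget", 5),
    ("mon", "east", "gadget", 7),
    ("mon", "west", "widget", 3),
    ("tue", "east", "widget", 4),
]

DAY, REGION, PRODUCT, AMOUNT = 0, 1, 2, 3


def build_cube(rows, dims):
    """Sum amount over the chosen dimensions; every other field is dropped."""
    cube = defaultdict(int)
    for row in rows:
        key = tuple(row[i] for i in dims)
        cube[key] += row[AMOUNT]
    return dict(cube)


# The up-front bet: day and region are kept, product is destroyed.
cube = build_cube(facts, dims=(DAY, REGION))

# Rolling up further still works, for example total per region.
per_region = defaultdict(int)
for (_day, region), amount in cube.items():
    per_region[region] += amount

# Nothing in `cube` can answer "how much per product?"; that needs the
# raw facts or a cube rebuilt with PRODUCT among its dimensions.
print(cube)
print(dict(per_region))
```

Choosing `dims` is the "predicting the future" step: every dimension left out is a class of questions the cube can never answer.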

The hardest context to manage is the stuff that’s not recorded as part of the data

From the specifics of the data collection procedure, to all the historical side-narratives that run parallel to a data set, there’s lots of important context that lives “outside” the data set. We often call this stuff “domain knowledge” and we can’t work without it. I hope it’s no surprise that I’m a really big proponent of analysts knowing their data before they try to work on it.

Passing this context along is the “Last Boss” of data work. It involves a lot of documentation work that few people want to do. All those hours spent writing up careful notes, articulating assumptions, and diagramming all the states that complicated processes can take can disappear with the next code release. This leads to the situation we’re all too familiar with: lots of this domain knowledge becomes tacit and is eventually lost.

Even if documentation were updated religiously by everyone, it would still require people to actually read the documentation (gasp!) that is already produced. How many of us have actually read the handbooks for data users of the US Census data? I certainly haven’t. This presents a whole different set of issues that have nothing to do with actually doing analysis, and everything to do with preparing to do analysis.

Working with context isn’t just one group’s job

Finally, practically everyone in an organization has a hand in owning or providing context to data. Obviously the data scientists and researchers who directly collect and make use of the data will spend the most time maintaining the collection of data and its associated contexts. But there are also all the folk who generate data as part of their activities: engineers, sales, marketers, support folk, etc. There are qualitative researchers who can go out and get qualitative context to shed light on what the data is actually saying. There are historians who have seen this data develop over time and can point to issues and trends. Plus so many more.

While I’m sure that many of us already know the value of pulling in context from other people and fields to round out our findings, this isn’t immediately clear to non-data folk. After all, what could be more definitive than numbers? The sooner we realize that we’re all working on this giant storytelling endeavor together, the easier it will be for all of us.

Standing offer: If you created something and would like me to review or share it w/ the data community — my mailbox and Twitter DMs are open.

About this newsletter

I’m Randy Au, Quantitative UX researcher, former data analyst, and general-purpose data and tech nerd. Counting Stuff is a weekly newsletter about the less-than-sexy aspects of data science, UX research and tech. With excursions into other fun topics.

Curated archive of evergreen posts can be found at randyau.com

All photos/drawings used are taken/created by Randy unless otherwise noted.


So very true. I work with publicly available data, and I find myself very often trying to guess at the "why was this data collected?". It would be oh so very helpful if, in addition to the data dictionary (though even that is often missing), a short explanation of why the data was collected was documented. That could explain a lot of what I see as oddities.

I worked with local crime incident data once. As I went through cleanup, I realized that some incidents were from far away places, other states even. That's when I realized that the data was not gathered to track crime, but to track cops' responses to incidents - a subtle but important difference. It was more a management tool for chiefs to track what their people were doing, not a tool to track crime.